1. 软件介绍

2. 软件安装

软件主页:The MARVEL Assembler

clone 后发现目录下没有 configure 文件,而是configure.ac文件,怎么安装???

2.1. 加载所需环境

1 | source /public/home/software/.bashrc |

版本检测1

2autoreconf --version

python --version

然后运行 autoreconf 来生成 configure 文件。

2.2. 安装

1 | ## 注意此目录与git clone 的 MARVEL 目录不同 |

make install 后提示如下信息1

2

3

4---------------------------------------------------------------

Installation into /public/home/cotton/software/MARVEL-bin finished.

Don't forget to include /public/home/cotton/software/MARVEL-bin/lib.python in your PYTHONPATH.

---------------------------------------------------------------

2.3. 添加PYTHONPATH

参见:Permanently add a directory to PYTHONPATH

1

export PYTHONPATH="$PYTHONPATH:/public/home/cotton/software/MARVEL-bin/lib.python"

3. 软件使用

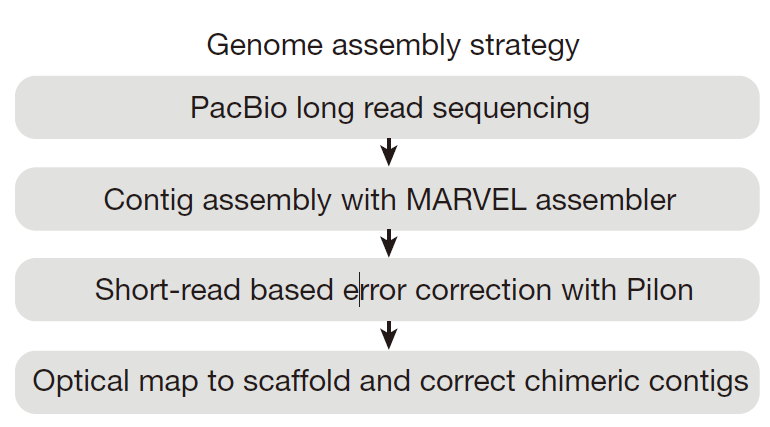

整体过程如下:

- overlap

- patch reads

- overlap (again)

- scrubbing

- assembly graph construction and touring

- optional read correction

- fasta file creation

实际运行主要经过两个过程,READ PATCHING PHASE 和 ASSEMBLY PHASE,即reads的处理阶段(DBprepare.py)和组装阶段(do.py);

3.1. 初始化数据库: DBprepare.py

1 | /public/home/cotton/software/MARVEL-bin/scripts/DBprepare.py ECOL p6.25x.fasta |

DBprepare.py 实际上是一个集成的python脚本,里面包含大约4步MARVEL程序 (FA2db,DBsplit,DBdust 和 HPCdaligner)。

运行完成将生成 ECOL.db,两个隐藏文件.ECOL.idx 和 .ECOL.bps ,两个plan后缀的文件;

3.2. 组装: do.py

1 | ##!/usr/bin/env python |

4. 软件运行实例

4.1. 分步运行

The axolotl genome and the evolution of key tissue formation regulators 基因组组装过程,来源于MARVEL的Github主页的 MARVEL/examples/axolotl/README (初始化数据库时经过Fix的修正过程)1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108This file contains the steps we took to assemble the axolotl genome.

########## READ PATCHING PHASE

## create initial database

FA2db -v -x 2000 -b AXOLOTL -f axoFiles.txt

## split database into blocks

DBsplit AXOLOTL

## create dust track

DBdust AXOLOTL

## create daligner and merge plans, replace SERVER and PORT

HPCdaligner -v -t 100 -D SERVER:PORT -r1 -j16 --dal 32 --mrg 32 -o AXOLOTL AXOLOTL

################################################################## DBprepare.py 脚本可运行上诉4步 ######################################################################################################

## start dynamic repeat masking server on SERVER:PORT, replace PORT (可跳过)

DMserver -t 16 -p PORT -C AXOLOTL 40 AXOLOTL_CP

################################################################################################################################################################################################################################

## AXOLOTL.daligner.plan 和 AXOLOTL.merge.plan 是 DBprepare.py 生成的结果,内容是一些运行LAmerge和daligner程序的命令

## run daligner to create initial overlaps

AXOLOTL.daligner.plan

## after all daligner jobs are finshied the dynamic repeat masker has to be shut down (可跳过)

DMctl -h HOST -p PORT shutdown

###### run LAmerge to merge overlap blocks

AXOLOTL.merge.plan

##############################################################################################################################################################################

## create quality and trim annotation (tracks) for each overlap block for each database block

LAq -b <block> AXOLOTL AXOLOTL.<block>.las

TKmerge -d AXOLOTL q

TKmerge -d AXOLOTL trim

## run LAfix to patch reads based on overlaps for each database block

LAfix -c -x 2000 AXOLOTL AXOLOTL.<block>.las AXOLOTL.<block>.fixed.fasta

########## ASSEMBLY PHASE

## create a new database with the fixed reads

FA2db -v -x 2000 -c AXOLOTL_FIX AXOLOTL.*.fixed.fasta

## split database into blocks

DBsplit AXOLOTL_FIX

## combine repeat tracks maskr and maskc that were created during read patching phase

TKcombine AXOLOTL_FIX mask maskr maskc

## create daligner and merge plans, replace SERVER and PORT

HPCdaligner -v -t 100 -D SERVER:PORT -m mask -r2 -j16 --dal 32 --mrg 32 -o AXOLOTL_FIX AXOLOTL_FIX

## start dynamic repeat masking server on SERVER:PORT, replace PORT

DMserver -t 16 -p PORT -C AXOLOTL_FIX 40 AXOLOTL_CP

###### run daligner to create overlaps

AXOLOTL_FIX.dalign.plan

## after all daligner jobs are finshied the dynamic repeat masker has to be shut down

DMctl -h HOST -p PORT shutdown

###### run LAmerge to merge overlap blocks

AXOLOTL_FIX.merge.plan

###### SCRUBBING PHASE

## repair alignments that prematurely stopped due to left-over errors in the reads for each database block

LAstitch -f 50 AXOLOTL_FIX AXOLOTL_FIX.<block>.las AXOLOTL_FIX.<block>.stitch.las

## create quality and trim annotation for each database block

LAq -T trim0 -s 5 -b <block> AXOLOTL_FIX AXOLOTL_FIX.<block>.stitch.las

TKmerge -d AXOLOTL_FIX q

TKmerge -d AXOLOTL_FIX trim0

## create a repeat annotation for each database block

LArepeat -c <coverage> -l 1.5 -h 2.0 -b <block> AXOLOTL_FIX AXOLOTL_FIX.<block>.stitch.las

TKmerge -d AXOLOTL_FIX repeats

## merge duplicate & overlapping annotation repeat annotation and masking server output

TKcombine {db} frepeats repeats maskc maskr

## remove gaps (ie. due to chimeric reads, ...) for each database block

LAgap -s 100 -t trim0 AXOLOTL_FIX AXOLOTL_FIX.<block>.stitch.las AXOLOTL_FIX.<block>.gap.las

## recalculate the trim track based on the cleaned up gaps for each database block

LAq -u -t trim0 -T trim1 -b <block> AXOLOTL_FIX AXOLOTL_FIX.<block>.gap.las

TKmerge -d AXOLOTL_FIX trim1

## filter repeat induced alignments and try to resolve repeat modules for each database block

LAfilter -p -s 100 -n 300 -r frepeats -t trim1 -o 1000 -u 0 AXOLOTL_FIX AXOLOTL_FIX.<block>.gap.las AXOLOTL_FIX.<block>.filtered.las

## not much is left now, so we can merge everything into a single las file

LAmerge -S filtered AXOLOTL_FIX AXOLOTL_FIX.filtered.las

## create overlap graph

mkdir -p components

OGbuild -t trim1 -s -c 1 AXOLOTL_FIX AXOLOTL_FIX.filtered.las components/AXOLOTL_FIX

## tour the overlap graph and create contigs paths for each components/*.graphml

OGtour.py -c AXOLOTL_FIX <component.graphml>

## create contig fasta files for each components/*.paths

tour2fasta.py -t trim1 AXOLOTL_FIX <component.graphml> <component.paths>

4.2. 集合运行

1 | source /public/home/software/.bashrc |

5. 疑问参数

5.1. 线程数目参数

-j参数表示线程数,需为2的幂次。

5.2. HPCdaligner 的 -D SERVER:PORT

解释见:HPCdaligner parameter <-D host:port> ##7,即重复序列多的基因组组装需要设置此参数,如果不是则不需要设置此参数和运行随后的 DMserver 步骤。如下即可:1

HPCdaligner -v -t 100 -r1 -j16 --dal 32 --mrg 32 -o AXOLOTL AXOLOTL

5.3. DMserver 的 -p PORT 和 expected.coverage

Dynamic repeat Masking server主要目的在于降低大基因组组装对于计算机CPU和内存的需求,

While preliminary calculations for computational time and storage space estimated over multiple millions of CPU hours and >2 PB of storage for one daligner run, the usage of a dynamic repeat masking server (below) reduced this dramatically to 150,000 CPU hours and 120 Tb of storage space for the complete pipeline.